This is the documentation for Assignment 2: Image Processing on the kPhone 469s, from CMU 15-473/673 Visual Computing Systems. The task is to implement a simple image processing pipeline for the data produced by the image sensor of the much-anticipated kPhone 469s.

For academic integrity reasons, most of the code for this project is hidden. If you would like access to the codebase, please contact Tony at linghent@andrew.cmu.edu or lockbrains@gmail.com. For a better reading experience, see https://hackmd.io/@Lockbrains/vcs_2_image_process

Project Overview

In the first part of the assignment we need to process the image data to produce an RGB image that looks as good as we can make it. The sensor will output RAW data from the method `sensor->ReadSensorData()`. The result is a `Width` by `Height` buffer.

Assumptions

We will make the following assumptions in this project.

1. We can assume that the pixel sensors are arranged in a Bayer mosaic, which means there are twice as many green pixels as red pixels or blue pixels, as shown in the following diagram.

This is because human perception is most sensitive to green light.

2. Pixel defects (stuck pixels, pixels with extra sensitivity) are static defects that are the same for every photograph taken by the camera.

3. Defective rows (a.k.a. *beams*) should be treated as rows of defective pixels, which means they are also static.

RAW Image Process

Now we can start processing the image. It is important to perform the steps in the correct order. Defective pixels, beams, and vignetting should be corrected before demosaicing. Noise filtering and white balance should be applied after demosaicing.

Thus, let's start with defective pixel removal.

Defective Pixel Removal

I detect the defective pixels with a hardcoded calibration method. Defective pixels show obviously deviant brightness that stays the same across different images. So we can find them by taking a photo of a black scene and a photo of a gray scene: any pixel with the same brightness in both should be considered defective.

Thus, at a high level, the algorithm can be summarized as:

- Take a picture of the black scene (`black.bin`).

- Take a picture of the gray scene (`gray.bin`).

- Find all the pixels with deviant brightness (equal brightness in both scenes).

- Store them in a map.

- Use the neighboring non-defective pixels to fix each of them.

Taking Photo of a Black Scene

The only reliable method for us to create a sensor and populate the buffer with raw pixel data is to use the `CreateSensor()` method. However, by the time we call `TakePicture()`, we should already have the black-scene and gray-scene outputs in hand.

Therefore, I created another public function called `TakeBlackPicture()` in the class. It does almost the same thing as `TakePicture()`, except that it calls `ProcessBlackshot()` instead of `ProcessShot()` to process the image -- `ProcessBlackshot()` is simply a function that writes the RAW data from the sensor to the image directly.

Create Defective Pixel Map

Now, with the help of the gray image and black image, I can easily locate the defective pixels. First, I need to pass these two images to `TakePicture()`, so I changed the interface of this function to:

    void TakePicture(Image & blackresult, Image & grayresult, Image & result);

Similarly, `ProcessShot()` also needs to take these two images, so we need to change its interface as well.

    void ProcessShot(Image & result, Image & blackresult, Image & grayresult, unsigned char * inputBuffer, int w, int h);

Once I have both of them, the next step is to create the defective pixel map and use it dynamically in the image processing pipeline.

Creating the map is trivial: simply compare the pixels at the same position. If a pixel has the same brightness in the black image and the gray image, it is defective.
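As a minimal sketch of this comparison (not the hidden project code), the map can be built in a single pass over the two calibration buffers. The function name `BuildDefectMap` and the `tolerance` parameter are assumptions made for illustration; with `tolerance = 0` it reduces to the exact equality test described above.

```cpp
#include <cstddef>
#include <cstdlib>
#include <vector>

// Hypothetical helper: marks a pixel as defective when its raw value in the
// black shot and the gray shot differs by at most `tolerance`.
std::vector<bool> BuildDefectMap(const std::vector<unsigned char>& black,
                                 const std::vector<unsigned char>& gray,
                                 int tolerance = 0) {
    std::vector<bool> defective(black.size(), false);
    for (std::size_t i = 0; i < black.size() && i < gray.size(); ++i) {
        int diff = std::abs(static_cast<int>(black[i]) - static_cast<int>(gray[i]));
        defective[i] = (diff <= tolerance);
    }
    return defective;
}
```

A small non-zero tolerance may help if the sensor adds noise to stuck pixels, but whether that is needed depends on the sensor model.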

Fixing Defective Pixels

I fix each defective pixel by replacing it with the average over a 3x3 box window of its neighboring pixels.
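A minimal sketch of such a box-window fix is shown below. The name `FixDefectivePixel` and the flat `std::vector<bool>` defect map are assumptions for illustration, and skipping other defective neighbors is my own choice here rather than something the pipeline is known to do.

```cpp
#include <vector>

// Hypothetical helper: replaces the pixel at (x, y) with the mean of the
// non-defective pixels in its 3x3 neighborhood.
void FixDefectivePixel(int x, int y, unsigned char* buffer, int w, int h,
                       const std::vector<bool>& defective) {
    int sum = 0, count = 0;
    for (int dy = -1; dy <= 1; ++dy) {
        for (int dx = -1; dx <= 1; ++dx) {
            int nx = x + dx, ny = y + dy;
            if (nx < 0 || nx >= w || ny < 0 || ny >= h) continue;
            // Skips flagged pixels, including (x, y) itself when it is flagged.
            if (defective[ny * w + nx]) continue;
            sum += buffer[ny * w + nx];
            ++count;
        }
    }
    if (count > 0) buffer[y * w + x] = static_cast<unsigned char>(sum / count);
}
```

Note that on a Bayer mosaic a 3x3 window mixes sites of different colors; a variant that samples same-color neighbors two pixels away would avoid that, at the cost of a wider window.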

Beam Removal

Removing the beams resembles removing defective pixels. I did the detection with the black image: if a row has an average brightness that is obviously higher than the average brightness of the overall black image, it is marked as a defective row, i.e. a beam.

Compensation Map

Just like the defective pixel map, I maintain the beams in a compensation map. I call it a compensation map because I believe the sensors in those rows respond deficiently to photons, so I simply add the missing part back.
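The row-level detection can be sketched as follows. This is only an illustration under stated assumptions: the name `BuildCompensationMap`, the `threshold` parameter, and the choice to store the offset between the row mean and the global mean are all hypothetical. The only property taken from the project is that a non-zero entry marks a beam.

```cpp
#include <vector>

// Hypothetical helper: returns one entry per row. Rows whose mean brightness
// in the black shot exceeds the global mean by more than `threshold` get the
// (row mean - global mean) offset; all other rows get 0.
// Assumes w > 0 and h > 0.
std::vector<float> BuildCompensationMap(const unsigned char* black, int w, int h,
                                        float threshold) {
    std::vector<float> rowMean(h, 0.0f);
    double total = 0.0;
    for (int y = 0; y < h; ++y) {
        double rowSum = 0.0;
        for (int x = 0; x < w; ++x) rowSum += black[y * w + x];
        rowMean[y] = static_cast<float>(rowSum / w);
        total += rowSum;
    }
    const float globalMean =
        static_cast<float>(total / (static_cast<double>(w) * h));

    std::vector<float> compensation(h, 0.0f);
    for (int y = 0; y < h; ++y) {
        float offset = rowMean[y] - globalMean;
        if (offset > threshold) compensation[y] = offset;
    }
    return compensation;
}
```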

Fixing the Beams

In the removal step, I simply add the missing brightness back to the deficient row.

Interestingly, this method fixed the majority of the beams, but created several more beams that were not originally in the image. I couldn't figure out the reason, so I ended up with (possibly the ugliest) solution -- hardcoding.

    void FixDefectiveRow(int x, int y, unsigned char * inputBuffer, int w, const std::vector<float> &compensationMap)
    {
        // OMIT
        if (compensationMap[y] != 0.0) {
            // Hardcoded: skip the rows where compensation introduced new beams.
            if (y != 195 && y != 437 && y != 438 && y != 487 && y != 558 && y != 559 && y != 557 && y != 560)
                // Other parts
        }
    }

Guess what? This really removes all the beams.

Vignette Removal

The last step to do before demosaicing is to remove the vignette.

We remove the vignette by boosting the brightness of pixels far from the center of the image, with a gain proportional to a power of the distance from the center.

All the parameters in this function appear as magic numbers, and indeed they are -- these numbers were tuned over several tests and proved to generate relatively good results.
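A minimal sketch of a radial gain of this kind is given below. The function name `RemoveVignette`, the `strength` and `power` parameters, and the gain formula `1 + strength * d^power` are assumptions for illustration; the tuned values used in the project are not reproduced here.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical helper: brightens the raw buffer with a gain that grows with a
// power of the normalized distance d (0 at the center, 1 at the corners).
void RemoveVignette(unsigned char* buffer, int w, int h, float strength, float power) {
    const float cx = 0.5f * (w - 1), cy = 0.5f * (h - 1);
    const float maxDist = std::sqrt(cx * cx + cy * cy);
    if (maxDist <= 0.0f) return;  // single-pixel image: nothing to correct
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            float d = std::sqrt((x - cx) * (x - cx) + (y - cy) * (y - cy)) / maxDist;
            float gain = 1.0f + strength * std::pow(d, power);
            float v = buffer[y * w + x] * gain;
            // Round to nearest and clamp to the 8-bit range.
            buffer[y * w + x] = static_cast<unsigned char>(std::min(v + 0.5f, 255.0f));
        }
    }
}
```

With `strength = 0.5` and `power = 2`, for example, the center pixel is left unchanged and the corners are brightened by 50%.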

Demosaic

Now we can finally move on to the demosaicing part. To get a correct demosaic process, we need to understand how to treat Bayer Mosaics.

Algorithms

Each pixel sensor is designed to let through only light of a certain color. The sensors are therefore laid out in a Bayer mosaic, and for each pixel we need to interpolate the other two channels that its sensor does not measure.

For example, for a pixel whose sensor is not red, we need to interpolate the red channel from the neighboring red pixels (four diagonal neighbors at a blue site, two at a green site). The interpolation is shown in the following diagram.

This leads us to the implementation.
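Since the project code is hidden, here is only a minimal sketch of bilinear interpolation on a Bayer mosaic. It assumes an RGGB layout (red at even x and even y), which may not match the real sensor, and the names `SiteColor` and `InterpolateChannel` are hypothetical.

```cpp
enum Channel { kRed = 0, kGreen = 1, kBlue = 2 };

// Assumed RGGB layout: red at (even x, even y), blue at (odd x, odd y),
// green elsewhere. The real sensor layout may differ.
Channel SiteColor(int x, int y) {
    if (x % 2 == 0 && y % 2 == 0) return kRed;
    if (x % 2 == 1 && y % 2 == 1) return kBlue;
    return kGreen;
}

// Returns channel `c` at (x, y): the raw value if the site measures `c`,
// otherwise the mean of the sites of color `c` in the 3x3 neighborhood.
float InterpolateChannel(const unsigned char* raw, int w, int h,
                         int x, int y, Channel c) {
    if (SiteColor(x, y) == c) return raw[y * w + x];
    int sum = 0, count = 0;
    for (int dy = -1; dy <= 1; ++dy) {
        for (int dx = -1; dx <= 1; ++dx) {
            int nx = x + dx, ny = y + dy;
            if (nx < 0 || nx >= w || ny < 0 || ny >= h) continue;
            if (SiteColor(nx, ny) != c) continue;
            sum += raw[ny * w + nx];
            ++count;
        }
    }
    return count > 0 ? static_cast<float>(sum) / count : 0.0f;
}
```

Calling `InterpolateChannel` three times per pixel, once per channel, yields the full RGB value.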

White Balance

For white balance, we want to adjust the relative intensity of the RGB values so that neutral tones appear neutral. My approach is to find the brightest region of the image and assume it is white.

Remember, since white balance comes after demosaicing, we can treat it as *post-processing*. From now on, post-processing dominates the image processing pipeline, which means all the functions take `Image`, `Width`, and `Height` as inputs.
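The white balance idea can be sketched as below. To keep the example self-contained it works on an interleaved RGB float buffer in [0, 1] rather than the project's `Image` type, and it simplifies "brightest region" to the single brightest pixel; both choices, and the name `WhiteBalance`, are assumptions for illustration.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical helper on an interleaved RGB float buffer in [0, 1].
// Scales each channel so that the brightest pixel (largest R+G+B) becomes white.
void WhiteBalance(std::vector<float>& rgb) {
    std::size_t brightest = 0;
    float best = -1.0f;
    for (std::size_t i = 0; i + 2 < rgb.size(); i += 3) {
        float s = rgb[i] + rgb[i + 1] + rgb[i + 2];
        if (s > best) { best = s; brightest = i; }
    }
    if (best <= 0.0f) return;  // empty or all-black image

    float gain[3];
    for (int c = 0; c < 3; ++c) {
        float ref = rgb[brightest + c];
        gain[c] = ref > 0.0f ? 1.0f / ref : 1.0f;
    }
    for (std::size_t i = 0; i + 2 < rgb.size(); i += 3) {
        for (int c = 0; c < 3; ++c) {
            rgb[i + c] = std::min(rgb[i + c] * gain[c], 1.0f);
        }
    }
}
```

Averaging over a small window around the brightest pixel instead would be more robust to a single noisy value.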

Noise Removal

Finally, using a **median filter**, we can filter out the high-frequency noise in the image and make it smoother.

Median Filter

Unlike a Gaussian blur, a median filter does not let one bright pixel drag up the average for the entire region: we simply use the median of the values under the kernel.

To optimize the median filter, we keep a histogram for each of the RGB channels so that we can easily return the median.

Implementation

The kernel size is determined by the variable `windowSize`.
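A minimal single-channel sketch of a histogram-based median filter is shown below; running it once per RGB channel gives the per-channel behavior described above. The function name `MedianFilter` and the border handling (using only the part of the window inside the image) are assumptions for illustration.

```cpp
#include <vector>

// Hypothetical single-channel median filter. `windowSize` is the odd kernel
// width; border pixels use whatever part of the window lies inside the image.
std::vector<unsigned char> MedianFilter(const std::vector<unsigned char>& src,
                                        int w, int h, int windowSize) {
    std::vector<unsigned char> dst(src.size());
    const int r = windowSize / 2;
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            int hist[256] = {0};
            int count = 0;
            for (int dy = -r; dy <= r; ++dy) {
                for (int dx = -r; dx <= r; ++dx) {
                    int nx = x + dx, ny = y + dy;
                    if (nx < 0 || nx >= w || ny < 0 || ny >= h) continue;
                    ++hist[src[ny * w + nx]];
                    ++count;
                }
            }
            // Walk the histogram until more than half of the samples are covered.
            int seen = 0, v = 0;
            for (; v < 256; ++v) {
                seen += hist[v];
                if (2 * seen > count) break;
            }
            dst[y * w + x] = static_cast<unsigned char>(v);
        }
    }
    return dst;
}
```

This version rebuilds the histogram for every pixel. Updating it incrementally as the window slides along a row would be a further optimization.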

Autofocus

In the second half of the assignment, I need to implement contrast-detection autofocus.

Based on analysis of regions of the sensor (notice that `sensor->ReadSensorData()` can return a crop window of the full sensor), I need to design an algorithm that sets the camera's focus via a call to `sensor->SetFocus()`.

Set Focus

Basically, autofocus is a process where we *automatically* set the focus to a value that *expresses* the shot best -- by express, I mean that we want the major part of the image to be as crisp as possible.

My solution is to set a minimum and a maximum focus for the camera and, stepping up from the minimum focus, find the shot with the most contrast. The contrast is determined by applying a Sobel kernel.

In this part we only need the RAW contrast, so the `ProcessBlackshot()` function we wrote earlier can be reused.
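The sweep itself can be sketched independently of the camera API. In the sketch below, `FindBestFocus` and the `contrastAt` callback are hypothetical: the callback is assumed to set the focus, read the sensor, and return a contrast score, so the exact signatures of the sensor calls do not need to be guessed here.

```cpp
#include <functional>

// Hypothetical sweep: `contrastAt(focus)` is assumed to set the focus, read the
// sensor, and return the contrast score of the resulting shot.
float FindBestFocus(float minFocus, float maxFocus, float step,
                    const std::function<float(float)>& contrastAt) {
    float bestFocus = minFocus;
    if (step <= 0.0f) return bestFocus;  // guard against an endless loop
    float bestContrast = contrastAt(minFocus);
    for (float f = minFocus + step; f <= maxFocus; f += step) {
        float c = contrastAt(f);
        if (c > bestContrast) {
            bestContrast = c;
            bestFocus = f;
        }
    }
    return bestFocus;
}
```

A linear sweep is simple but takes one shot per step; a coarse pass followed by a finer pass around the best coarse value would need fewer shots.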

It suffices to implement the last remaining method -- `CalculateImageContrast()`.

Contrast Detection

Algorithm

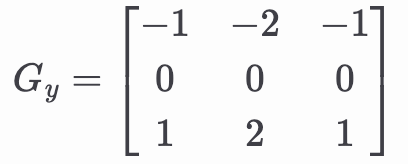

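We determine the contrast of each image using the $3\times3$ Sobel kernels, which are

$$S_x = \begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix}$$

for the horizontal direction and

$$S_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{bmatrix}$$

for the vertical direction.

The contrast of an image is determined by the gradient of each channel. With kernel offsets $(i,j) \in \{-1, 0, 1\}^2$, where $S(i,j)$ denotes the kernel entry at horizontal offset $i$ and vertical offset $j$ and $I^c$ is channel $c$ of the image, the gradient of channel $c$ at pixel $(x, y)$ is determined by

$$G_x^c(x, y) = \sum_{i=-1}^{1}\sum_{j=-1}^{1} S_x(i, j)\, I^c(x+i,\, y+j), \qquad G_y^c(x, y) = \sum_{i=-1}^{1}\sum_{j=-1}^{1} S_y(i, j)\, I^c(x+i,\, y+j)$$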

and the contrast is simply
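A minimal single-channel sketch of a Sobel-based contrast score follows. It assumes the contrast is the sum of gradient magnitudes over all interior pixels, which is one common choice and not necessarily the definition used in this project; the name `SobelContrast` is hypothetical.

```cpp
#include <cmath>
#include <vector>

// Hypothetical single-channel contrast score: sums the Sobel gradient magnitude
// over all interior pixels. Summing magnitudes is an assumed definition.
double SobelContrast(const std::vector<unsigned char>& img, int w, int h) {
    static const int kSobelX[3][3] = {{-1, 0, 1}, {-2, 0, 2}, {-1, 0, 1}};
    static const int kSobelY[3][3] = {{-1, -2, -1}, {0, 0, 0}, {1, 2, 1}};
    double contrast = 0.0;
    for (int y = 1; y + 1 < h; ++y) {
        for (int x = 1; x + 1 < w; ++x) {
            int gx = 0, gy = 0;
            for (int j = -1; j <= 1; ++j) {
                for (int i = -1; i <= 1; ++i) {
                    int v = img[(y + j) * w + (x + i)];
                    gx += kSobelX[j + 1][i + 1] * v;
                    gy += kSobelY[j + 1][i + 1] * v;
                }
            }
            contrast += std::sqrt(static_cast<double>(gx * gx + gy * gy));
        }
    }
    return contrast;
}
```

A flat image scores 0, and sharper edges raise the score, which is the property the focus sweep relies on.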
